Not Able to Read the Data From Avrotable in Hive

Drilling into Big Data – Data Querying and Analysis (6)

By Priyankaa Arunachalam, Alibaba Cloud Tech Share Writer. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

In the previous article, we discussed Spark for big data and showed you how to set it up on Alibaba Cloud.

In this article of the blog series, we will walk you through the basics of Hive, including table creation and other underlying concepts for big data applications.

"Our ability to do great things with data will make a real difference in every aspect of our lives," Jennifer Pahlka

There are different ways of executing MapReduce operations. The first is the traditional approach, where we use a Java MapReduce program for all types of data. The second is the scripting approach, where MapReduce processes structured and semi-structured data; this is achieved using Pig. Then comes the Hive Query Language (HiveQL or HQL), where MapReduce processes structured data; this is achieved using Hive.

The Case for Hive

As discussed in our previous article, Hadoop is a vast array of tools and technologies, and at this point it is more convenient to deploy Hive and Pig. Hive has its advantages over Pig, especially since it can make data reporting and analysis easier through warehousing.

Hive is built on top of Hadoop and is used for querying and analyzing data stored in HDFS. It is a tool that helps programmers analyze large data sets and access the data easily with the help of a query language called HiveQL. Hive internally converts these SQL-like queries into MapReduce jobs that run on Hadoop.

We also have Impala at this point, which is quite commonly mentioned along with Hive, but if you watch keenly, Hive has its own space in the marketplace and hence it has better support too. Impala is also a query engine built on top of Hadoop. It makes use of the existing Hive installation, as many Hadoop users already have it in place to perform batch-oriented jobs.

The main goal of Impala is to make fast and efficient operations through SQL. Integrating Hive with Impala gives users the option to use either Hive or Impala for processing or for creating tables. Impala uses a language called ImpalaQL, which is a subset of HiveQL. In this article, we will focus on Hive.

Features of Hive

- Hive is designed for managing and querying only structured data.

- When dealing with structured data, MapReduce doesn't have optimization features like UDFs, but the Hive framework can help you better in terms of optimization.

- The complexity of MapReduce programming can be reduced using Hive, as it uses HQL instead of Java. Hence the concepts are similar to SQL.

- Hive uses partitioning to improve performance on certain queries.

- A significant component of Hive is the Metastore, which resides in a relational database.

Hive and Relational Databases

Relational databases follow "schema on read and schema on write", where operations like insertions, updates, and modifications can be performed. By borrowing the concept of "write once, read many" (WORM), Hive was designed around "schema on read" only. A typical Hive query runs on multiple DataNodes, so it was hard to update and modify data across multiple nodes. This has been addressed in later versions of Hive: since Hive 0.14, tables configured as transactional support row-level INSERT, UPDATE, and DELETE.

File Formats

Hive supports various file formats, such as flat files or text files, SequenceFiles, RC and ORC files, Avro files, Parquet, and custom input and output formats. Text file is the default file format in Hive.
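As a rough sketch of how a file format is selected, the STORED AS clause of CREATE TABLE names the format. The table and column names below are hypothetical and not part of the TripAdvisor example, and the STORED AS AVRO shorthand assumes Hive 0.14 or later.

```sql
-- Hypothetical tables; only the STORED AS clause differs between them.

-- Plain text, the default format (the clause could be omitted here).
CREATE TABLE visits_text (museum STRING, visitors INT)
STORED AS TEXTFILE;

-- Columnar formats, usually a better fit for analytical queries.
CREATE TABLE visits_orc (museum STRING, visitors INT)
STORED AS ORC;

CREATE TABLE visits_parquet (museum STRING, visitors INT)
STORED AS PARQUET;

-- Row-oriented Avro (assumes Hive 0.14 or later for this shorthand).
CREATE TABLE visits_avro (museum STRING, visitors INT)
STORED AS AVRO;

-- Copy rows from one format into another.
INSERT OVERWRITE TABLE visits_orc
SELECT museum, visitors FROM visits_text;
```

Because the format is a property of the table, the same SELECT works against any of these tables without changes.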

Storage options in Hive

- Metastore: The Metastore is a major component to look at. It keeps track of all the metadata of databases, tables, data types, etc.

- Tables: There are two different types of tables available in Hive: normal tables and external tables. Both are similar to common tables in a database, but the keyword EXTERNAL lets you create a table and provide a LOCATION so that Hive does not use the default location. Another difference is that when an external table is deleted, you still have the data residing in HDFS. On the other hand, the data in a normal table gets deleted when the table is deleted.

- Partitions: Partitioning slices a table into parts, each stored in a different subdirectory inside the table's directory. This helps boost query performance, particularly for SELECT statements with a WHERE clause.

- Buckets: Buckets are hashed partitions, and they help speed up joins and sampling of data. To use bucketing in Hive, add the CLUSTERED BY clause while creating a table. Each bucket is stored as a file under the table directory. Set the number of buckets at table creation time. Based on the hash value, the data is distributed across the buckets.
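The storage options above can be sketched in HiveQL roughly as follows. All table names, column names, the HDFS path, and the bucket count are hypothetical and chosen only for illustration.

```sql
-- Hypothetical external table: Hive reads the files under LOCATION and
-- leaves them in place when the table is dropped. The path is an assumption.
CREATE EXTERNAL TABLE museum_reviews_ext (
  museumname STRING,
  rating     FLOAT
)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
STORED AS TEXTFILE
LOCATION '/data/museum_reviews';

-- Hypothetical managed table, partitioned by state and bucketed by museum name.
-- Each state gets its own subdirectory; each bucket is a file inside it.
CREATE TABLE museum_reviews_part (
  museumname STRING,
  rating     FLOAT
)
PARTITIONED BY (state STRING)
CLUSTERED BY (museumname) INTO 8 BUCKETS
STORED AS ORC;

-- A filter on the partition column lets Hive read only the matching subdirectory.
SELECT museumname, rating
FROM museum_reviews_part
WHERE state = 'CA';
```

Note that the partition column (state) is declared only in PARTITIONED BY and not in the regular column list, since its value is encoded in the directory name rather than in the data files.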

Hive SerDe

A Serializer/Deserializer (SerDe) is what Hive uses for I/O; it handles both serialization and deserialization. There are different types of SerDe, such as native SerDes and custom SerDes, with which you can create tables. If ROW FORMAT is not specified, the native SerDe is used. Apart from the existing SerDe types, we can also write our own SerDe for our own data formats. At this initial stage, we will just get familiar with the concept, as it is an important one to focus on in Hive.
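A minimal sketch of the difference between relying on the native SerDe and naming one explicitly is shown below. The table names are hypothetical, and the second table uses OpenCSVSerde, which ships with Hive 0.14 and later, rather than the third-party CSV SerDe used elsewhere in this article.

```sql
-- No ROW FORMAT SERDE clause: Hive falls back to its native SerDe
-- (LazySimpleSerDe) and splits each line on the given delimiter.
CREATE TABLE museums_delimited (museumname STRING, state STRING)
ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'
STORED AS TEXTFILE;

-- Explicit SerDe: OpenCSVSerde parses quoted CSV fields.
-- This SerDe exposes every column as STRING.
CREATE TABLE museums_csv (museumname STRING, state STRING)
ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.OpenCSVSerde'
WITH SERDEPROPERTIES (
  "separatorChar" = ",",
  "quoteChar"     = "\""
)
STORED AS TEXTFILE;
```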

To recall, whatever file you write to HDFS is simply stored as a file there. This is where Hive comes in: it can impose structure on different data formats. The sections below cover starting a Hive shell, its usage, and some basic queries to begin with and to understand how Hive works.

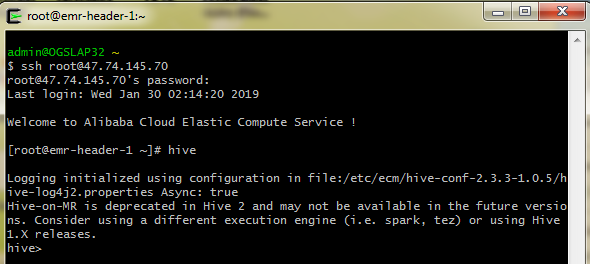

Initiating Hive Shell

Log in to the Alibaba Cloud master Elastic Compute Service (ECS) instance, and just type "hive", which leads to a screen as shown below. If you have configured Hive to run on a different worker node rather than the master, log in to that particular host and open the Hive shell.

Let's look through some basic queries in HiveQL. The very first is creating a table in Hive.

Syntax

CREATE TABLE [IF NOT EXISTS] table_name [(col_name data_type [COMMENT col_comment], ...)] [COMMENT table_comment] [ROW FORMAT row_format] [STORED AS file_format]

Output

OK
Time taken: 5.905 seconds

In our example, let's try creating a table with the columns present in the tripadvisor_merged sheet.

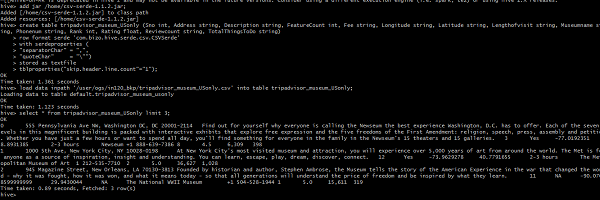

create table tripadvisor_museum_USonly (Sno string, Address string, Description string, Fee string, Longitude string, Latitude string, Lengthofvisit string, Museumname string, Phonenum string, Rank string, Rating float, Reviewcount string, TotalThingsToDo string, Country string, State string, Rankpercentage string, Art_Galleries string, Art_Museums string, Auto_Race_Tracks string, Families_Count string, Couple_Count string, Solo_Count string, Friends_Count string) row format serde 'com.bizo.hive.serde.csv.CSVSerde' with serdeproperties ( "separatorChar" = ",", "quoteChar" = "\"") stored as textfile tblproperties ("skip.header.line.count"="1");

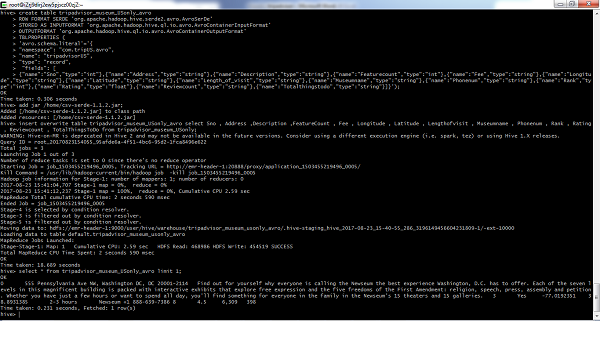

For the same case, let's try creating an Avro table, as we have been speaking about this file format throughout the blog series for its good performance.

create table tripadvisor_museum_USonly_avro ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.avro.AvroSerDe' STORED AS INPUTFORMAT 'org.apache.hadoop.hive.ql.io.avro.AvroContainerInputFormat' OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.avro.AvroContainerOutputFormat' TBLPROPERTIES ( 'avro.schema.literal'='{ "namespace": "com.trip.avro", "name": "tripadvisor", "type": "record", "fields": [ {"name":"Sno","type":"string"},{"name":"Address","type":"string"},{"name":"Description","type":"string"},{"name":"FeatureCount","type":"string"},{"name":"Fee","type":"string"},{"name":"Longitude","type":"string"},{"name":"Latitude","type":"string"},{"name":"Length_of_visit","type":"string"},{"name":"Museumname","type":"string"},{"name":"descri_sub","type":"string"}]}');

Now let's insert the data into the Avro table using an insert statement. Below is a sample insert query for the Avro table.

insert overwrite table tripadvisor_museum_USonly_avro select Sno, Address, Description, FeatureCount, Fee, Longitude, Latitude, Lengthofvisit, Museumname, descri_sub from tripadvisor_museum_USonly;

Similarly, define columns for all the columns in the file and create a corresponding insert statement to insert the data into the created tables.

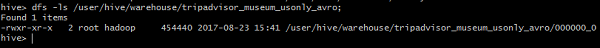

Once done, list the folder to view it
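One way to do this from inside the Hive shell is sketched below. The warehouse path is an assumption based on Hive's default hive.metastore.warehouse.dir setting; check the Location value reported for your own table and use that path instead.

```sql
-- Show table metadata; the Location row gives the table's HDFS directory.
DESCRIBE FORMATTED tripadvisor_museum_USonly_avro;

-- List that directory from the Hive shell.
-- /user/hive/warehouse is the default warehouse path and is an assumption here;
-- Hive stores table directory names in lowercase.
dfs -ls /user/hive/warehouse/tripadvisor_museum_usonly_avro;
```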

Load Data

Generally, after creating a table in SQL, we insert data using the INSERT statement. But in Hive, we can insert an entire dataset using the "LOAD DATA" statement.
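As a hedged illustration of the statement, the sketch below loads a file into the CSV table created earlier. Both file paths are hypothetical.

```sql
-- With LOCAL, the path is on the local file system of the machine running
-- the Hive shell, and the file is copied into the table's directory.
-- OVERWRITE replaces any data already in the table.
LOAD DATA LOCAL INPATH '/tmp/tripadvisor_merged.csv'
OVERWRITE INTO TABLE tripadvisor_museum_USonly;

-- Without LOCAL, the path refers to HDFS, and the file is moved
-- (not copied) into the table's directory.
LOAD DATA INPATH '/user/hive/staging/tripadvisor_merged.csv'
INTO TABLE tripadvisor_museum_USonly;
```

LOAD DATA does not transform the file; it only places it under the table, so the file must already match the table's declared format.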

Syntax

LOAD DATA [LOCAL] INPATH 'filepath' [OVERWRITE] INTO TABLE tablename;

Using OSS in Hive

To use Alibaba Cloud Object Storage Service (OSS) as storage in Hive, create an external table as follows:

CREATE EXTERNAL TABLE demo_table ( userid INT, name STRING) LOCATION 'oss://demo1bucket/users';

For example, write a script for creating an external table and save it as hiveDemo.sql. Once done, upload it to OSS.

USE DEFAULT; set hive.input.format=org.apache.hadoop.hive.ql.io.HiveInputFormat; set hive.stats.autogather=false; CREATE EXTERNAL TABLE demo_table ( userid INT, name STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' STORED AS TEXTFILE LOCATION 'oss://demo1bucket/users';

Create a new job in E-MapReduce based on the following configuration:

-f ossref://${bucket}/yourpath/hiveDemo.sql

Specify the bucket name in "${bucket}" and give the location where you have saved the Hive script in "yourpath".

When Is Hive Not Suitable?

- Hive may not be the best fit for OnLine Transaction Processing (OLTP)

- Hive is not suited to real-time queries

- Hive is not designed for updates at row level

Best Practices

- Use partitioning to improve query performance.

- If the bucket key and join keys are the same, bucketing can help improve join performance.

- Selecting an input format plays a critical role, as discussed throughout this series, and Hive is no exception. Choosing an appropriate file format can improve Hive performance.

- Sampling allows users to take a subset of a dataset and analyze it without having to work with the entire data set. Hive offers a built-in TABLESAMPLE clause that allows sampling of tables.
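As a small sketch of the sampling practice above, the queries below apply TABLESAMPLE to the CSV table created earlier. The bucket count of 10 is an arbitrary choice for illustration.

```sql
-- Bucket sampling on rand(): rows are hashed into 10 buckets at random
-- and the first bucket is kept, giving roughly one tenth of the rows.
SELECT *
FROM tripadvisor_museum_USonly TABLESAMPLE (BUCKET 1 OUT OF 10 ON rand()) s;

-- Bucket sampling on a column: the same rows fall into the same bucket
-- on every run, so the sample is repeatable.
SELECT *
FROM tripadvisor_museum_USonly TABLESAMPLE (BUCKET 1 OUT OF 10 ON Sno) s;
```

When a table is already bucketed with CLUSTERED BY on the sampled column, Hive can read only the matching bucket files instead of scanning the whole table.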


Source: https://www.alibabacloud.com/blog/drilling-into-big-data-data-querying-and-analysis-6_594662